How can we mix a canvas stream with an audio stream using MediaRecorder?

Date: 2023-06-21

Question

I have a canvas stream from canvas.captureStream(). I also have a video stream from a WebRTC video call. Now I want to mix the canvas stream with the audio tracks of that video stream. How can I do that?

Recommended answer

Use the MediaStream constructor, available in Firefox and in Chrome 56 and later, to combine tracks into a new stream:

```javascript
let stream = new MediaStream([videoTrack, audioTrack]);
```
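Applied to the setup in the question, a minimal sketch might look like this. It assumes `canvasStream` comes from `canvas.captureStream()` and `callStream` is the remote `MediaStream` of the WebRTC call (for example `event.streams[0]` in an `RTCPeerConnection` `track` event handler). The function name `combineCanvasWithCallAudio` is hypothetical, and the constructor is a parameter only so the sketch can be exercised outside a browser.

```javascript
// Hypothetical helper: build one stream from the canvas's video and the call's audio.
// MediaStreamCtor defaults to the browser's MediaStream; it is a parameter only so
// the function can be tested with a stand-in outside the browser.
function combineCanvasWithCallAudio(canvasStream, callStream, MediaStreamCtor = globalThis.MediaStream) {
  const videoTracks = canvasStream.getVideoTracks(); // the canvas frames
  const audioTracks = callStream.getAudioTracks();   // the call's audio; its video is dropped
  if (videoTracks.length === 0) {
    throw new Error("canvas stream has no video track");
  }
  // The constructor takes an array of tracks. The new stream holds the same track
  // objects (not copies), so stopping a track here also stops it in the call stream.
  return new MediaStreamCtor([...videoTracks, ...audioTracks]);
}
```

In the page, the returned stream can be passed straight to `new MediaRecorder(...)`.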

The following works for me in Firefox. In Chrome it needs an https fiddle, and even then it errors when recording:

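```javascript
// div, canvas, video and link are the elements with those ids in the HTML below
// (browsers expose element ids as globals).

// Append a line of text to the log <div>.
function log(msg) {
  div.innerHTML += "<br>" + msg;
}

// Stop every track of a stream (releases the microphone).
function stop(stream) {
  stream.getTracks().forEach(track => track.stop());
}

// Resolve after ms milliseconds.
function wait(ms) {
  return new Promise(resolve => setTimeout(resolve, ms));
}

// Draw grayscale white noise on the hidden canvas every animation frame
// and return the canvas as a 60 fps video stream.
function whiteNoise() {
  const ctx = canvas.getContext("2d");
  ctx.fillRect(0, 0, canvas.width, canvas.height); // opaque black, so alpha is 255
  const p = ctx.getImageData(0, 0, canvas.width, canvas.height);
  requestAnimationFrame(function draw() {
    // Pixels are RGBA: give R, G and B the same random value, leave alpha alone.
    for (let i = 0; i < p.data.length; i += 4) {
      p.data[i] = p.data[i + 1] = p.data[i + 2] = Math.random() * 255;
    }
    ctx.putImageData(p, 0, 0);
    requestAnimationFrame(draw);
  });
  return canvas.captureStream(60);
}

// Record stream with MediaRecorder for ms milliseconds and
// resolve with the recorded Blob chunks.
function record(stream, ms) {
  const rec = new MediaRecorder(stream), data = [];
  rec.ondataavailable = e => data.push(e.data);
  rec.start();
  log(rec.state + " for " + (ms / 1000) + " seconds...");
  const stopped = new Promise((resolve, reject) => {
    rec.onstop = resolve;
    rec.onerror = e => reject(e.error || e.name);
  });
  return Promise.all([stopped, wait(ms).then(() => rec.stop())]).then(() => data);
}

// Combine the microphone's audio track with the canvas's video track into a
// new MediaStream, record it for 5 seconds, then play back the result.
// The helpers are declared first so that log is defined when .catch(log) runs.
navigator.mediaDevices.getUserMedia({ audio: true })
  .then(stream => record(new MediaStream([stream.getTracks()[0],
                                          whiteNoise().getTracks()[0]]), 5000)
    .then(recording => {
      stop(stream);
      video.src = link.href = URL.createObjectURL(new Blob(recording));
      link.download = "recording.webm";
      link.innerHTML = "Download recording";
      log("Playing " + recording[0].type + " recording:");
    }))
  .catch(log);
```

```html
<div id="div"></div><br>
<canvas id="canvas" width="160" height="120" hidden></canvas>
<video id="video" width="160" height="120" autoplay></video>
<a id="link"></a>
```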
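The answer puts exactly one audio track into the new stream. If the call stream carries several audio tracks (or you also want the local microphone), one option, not part of the original answer, is to mix them down to a single track with the Web Audio API before recording, since a MediaRecorder is not guaranteed to record more than one audio track. A minimal sketch follows: `mixAudioTracks` is a hypothetical name, while `createMediaStreamSource` and `createMediaStreamDestination` are standard `AudioContext` methods. The context and constructor are injectable only so the function can be tested with stand-ins.

```javascript
// Hypothetical helper: mix every given audio track into ONE track with the Web Audio API.
// audioContext and MediaStreamCtor default to the browser's AudioContext / MediaStream;
// they are parameters only so the function can be exercised with stand-ins.
function mixAudioTracks(audioTracks, {
  audioContext = new AudioContext(),
  MediaStreamCtor = globalThis.MediaStream,
} = {}) {
  // The destination node exposes its output as a MediaStream with one audio track.
  const destination = audioContext.createMediaStreamDestination();
  for (const track of audioTracks) {
    // createMediaStreamSource takes a stream, so wrap each track in its own stream.
    const source = audioContext.createMediaStreamSource(new MediaStreamCtor([track]));
    // All sources connected to the same node are summed, i.e. mixed.
    source.connect(destination);
  }
  return destination.stream.getAudioTracks()[0];
}
```

The returned track can then be combined with the canvas video track exactly as in the answer, e.g. `new MediaStream([canvasVideoTrack, mixAudioTracks(callStream.getAudioTracks())])`. Note that an `AudioContext` may start in the "suspended" state until a user gesture; if the recording comes out silent, calling `audioContext.resume()` from a click handler is worth trying.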

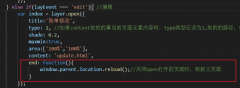

layer.open打开的页面关闭时,父页面刷新的方法layer.open打开的页面关闭时,父页面刷新的方法,在layer.open中添加: end: function(){ window.parent.location.reload();//关闭open打开的页面时,刷新父页面 }

layer.open打开的页面关闭时,父页面刷新的方法layer.open打开的页面关闭时,父页面刷新的方法,在layer.open中添加: end: function(){ window.parent.location.reload();//关闭open打开的页面时,刷新父页面 }