Is there a simpler (and still performant) way to upscale a canvas render with nearest-neighbor resampling?

Date: 2023-06-20

Problem description

I'm kinda stumped about this seemingly simple task of upscaling a canvas render in nearest neighbor format, which I asked here:

How can I properly write this shader function in JS?

The goal being to convert a 3D render output like this:

to pixel art like this:

But in that question I'm asking how to implement my chosen solution (using essentially a shader to handle the up-scaling) correctly. Perhaps instead I should ask: Is there a simpler (and still performant) way to do this?

I can offer two approaches that can both efficiently scale an image up or down using nearest neighbor.

To do it manually, iterate over each pixel of the new, scaled image and use the ratio of the old size to the new size to work out which pixel of the original it should copy.
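The index mapping described above can be sketched as a standalone function. This is a minimal illustration, not code from the answer: the name `scaleNearest` and its parameters are hypothetical, and it assumes a tightly packed RGBA byte buffer such as the `data` array returned by `getImageData`.

```javascript
// Hypothetical helper (not from the original answer): nearest-neighbor
// resampling of a tightly packed RGBA byte buffer.
function scaleNearest(src, srcWidth, srcHeight, dstWidth, dstHeight) {
  const dst = new Uint8ClampedArray(dstWidth * dstHeight * 4);
  // Ratio of old size to new size: how far to step in the source
  // for each step of one pixel in the destination.
  const xRatio = srcWidth / dstWidth;
  const yRatio = srcHeight / dstHeight;
  for (let y = 0; y < dstHeight; ++y) {
    // Clamp so rounding can never read past the last source row.
    const srcY = Math.min(srcHeight - 1, Math.floor(y * yRatio));
    for (let x = 0; x < dstWidth; ++x) {
      const srcX = Math.min(srcWidth - 1, Math.floor(x * xRatio));
      const s = (srcX + srcY * srcWidth) * 4;
      const d = (x + y * dstWidth) * 4;
      dst[d] = src[s];         // red
      dst[d + 1] = src[s + 1]; // green
      dst[d + 2] = src[s + 2]; // blue
      dst[d + 3] = src[s + 3]; // alpha
    }
  }
  return dst;
}
```

In a browser, the returned array could be wrapped with `new ImageData(dst, dstWidth, dstHeight)` and drawn with `putImageData`. Unlike the demo below, this sketch copies the source alpha instead of forcing it to 255.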

(My code snippets use .toDataURL(), so they may not work in Chrome.)

<!doctype html>
<html>
<head>
  <meta charset="utf-8">
  <style>
    #input {
      display: none;
    }

    body {
      background-color: black;
    }

    body > * {
      display: block;
      margin-top: 10px;
      margin-left: auto;
      margin-right: auto;
    }

    img {
      background-color: gray;
      border: solid 1px white;
      border-radius: 10px;
      /* Standard value; keeps the browser from smoothing the preview. */
      image-rendering: pixelated;
    }

    label {
      transition: 0.1s;
      cursor: pointer;
      text-align: center;
      font-size: 15px;
      -webkit-touch-callout: none;
      -webkit-user-select: none;
      -khtml-user-select: none;
      -moz-user-select: none;
      -ms-user-select: none;
      user-select: none;
      width: 130px;
      height: 40px;
      line-height: 40px;
      border-radius: 10px;
      color: white;
      background-color: #005500;
      box-shadow: 0px 4px #555555;
    }

    label:hover {
      background-color: #007700;
    }

    label:active {
      box-shadow: 0px 1px #555555;
      transform: translateY(3px);
    }

    script {
      display: none;
    }
  </style>
</head>
<body>
  <img id="unscaledImage">
  <img id="scaledImage">
  <input id="scale" type="range" min="1" max="100" value="50">
  <label for="input">Upload Image</label>
  <input id="input" type="file">
  <script type="application/javascript">
  void function() {
    "use strict";

    var unscaledImage = null;
    var scaledImage = null;
    var scale = null;
    var input = null;
    var canvas = null;
    var ctx = null;
    var hasImage = false;

    // Scale img by the factor scale using nearest neighbor and
    // return the result as a data URL.
    function scaleImage(img,scale) {
      // Never let the scaled size reach zero; createImageData would throw.
      var newWidth = Math.max(1,(img.width * scale) | 0);
      var newHeight = Math.max(1,(img.height * scale) | 0);

      // Draw the source at its original size so its pixels can be read back.
      canvas.width = img.width;
      canvas.height = img.height;
      ctx.drawImage(img,0,0);

      var unscaledData = ctx.getImageData(0,0,img.width,img.height);
      var scaledData = ctx.createImageData(newWidth,newHeight);
      var unscaledBitmap = unscaledData.data;
      var scaledBitmap = scaledData.data;

      // Ratio of old size to new size.
      var xScale = img.width / newWidth;
      var yScale = img.height / newHeight;

      for (var x = 0; x < newWidth; ++x) {
        for (var y = 0; y < newHeight; ++y) {
          // Source pixel that this destination pixel copies.
          var _x = (x * xScale) | 0;
          var _y = (y * yScale) | 0;
          var scaledIndex = (x + y * newWidth) * 4;
          var unscaledIndex = (_x + _y * img.width) * 4;

          scaledBitmap[scaledIndex] = unscaledBitmap[unscaledIndex];
          scaledBitmap[scaledIndex + 1] = unscaledBitmap[unscaledIndex + 1];
          scaledBitmap[scaledIndex + 2] = unscaledBitmap[unscaledIndex + 2];
          scaledBitmap[scaledIndex + 3] = 255; // always opaque
        }
      }

      ctx.clearRect(0,0,canvas.width,canvas.height);
      canvas.width = newWidth;
      canvas.height = newHeight;
      ctx.putImageData(scaledData,0,0);

      return canvas.toDataURL();
    }

    function onImageLoad() {
      URL.revokeObjectURL(this.src);
      scaledImage.src = scaleImage(this,scale.value * 0.01);
      // Display the scaled result at the original size.
      scaledImage.style.width = this.width + "px";
      scaledImage.style.height = this.height + "px";
      hasImage = true;
    }

    function onImageError() {
      URL.revokeObjectURL(this.src);
    }

    function onScaleChanged() {
      if (hasImage) {
        scaledImage.src = scaleImage(unscaledImage,this.value * 0.01);
      }
    }

    function onImageSelected() {
      if (this.files[0]) {
        unscaledImage.src = URL.createObjectURL(this.files[0]);
      }
    }

    onload = function() {
      unscaledImage = document.getElementById("unscaledImage");
      scaledImage = document.getElementById("scaledImage");
      scale = document.getElementById("scale");
      input = document.getElementById("input");
      canvas = document.createElement("canvas");
      ctx = canvas.getContext("2d");
      ctx.imageSmoothingEnabled = false;

      unscaledImage.onload = onImageLoad;
      unscaledImage.onerror = onImageError;
      // "change" also fires for keyboard and touch input, unlike "mouseup".
      scale.onchange = onScaleChanged;
      input.oninput = onImageSelected;
    }
  }();
  </script>
</body>
</html>

Alternatively, a much faster way is to use shaders: upload your image to a texture that is set to use nearest-neighbor filtering and draw it onto a quad. The size of the quad can be controlled via gl.viewport before drawing.
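To make clear what the GPU does with that filter setting, here is a CPU-side sketch of the texel lookup that gl.NEAREST performs for a quad covering the whole viewport. The function names are hypothetical, and the sketch ignores GPU precision details and the vertical flip of the UVs. It assumes each fragment is sampled at its pixel center, which is how WebGL rasterizes, whereas the manual loop above maps from the pixel corner.

```javascript
// Hypothetical illustration (not from the original answer).
// Texel chosen by NEAREST filtering for output pixel `pixel`, when a
// quad with UVs running 0..1 covers `outSize` pixels of the viewport.
function nearestTexel(pixel, outSize, texSize) {
  const u = (pixel + 0.5) / outSize; // UV at the fragment center
  return Math.min(texSize - 1, Math.floor(u * texSize)); // clamp to edge
}

// Texel chosen by the manual loop's corner-based mapping, for comparison.
function manualTexel(pixel, outSize, texSize) {
  return Math.floor(pixel * (texSize / outSize));
}

// Map every output pixel along one axis to its source texel.
function texelRow(pick, outSize, texSize) {
  const row = [];
  for (let pixel = 0; pixel < outSize; ++pixel) {
    row.push(pick(pixel, outSize, texSize));
  }
  return row;
}
```

When upscaling by a whole factor the two mappings agree, for example 2 texels to 4 pixels gives 0, 0, 1, 1 either way. When downscaling they can pick different texels: 6 texels to 2 pixels gives 1, 4 with center sampling and 0, 3 with the corner mapping. Both are valid nearest-neighbor results.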

<!doctype html>
<html>
<head>
  <meta charset="utf-8">
  <style>
    #file {
      display: none;
    }

    body {
      background-color: black;
    }

    body > * {
      display: block;
      margin-top: 10px;
      margin-left: auto;
      margin-right: auto;
    }

    img {
      background-color: gray;
      border: solid 1px white;
      border-radius: 10px;
      /* Standard value; keeps the browser from smoothing the preview. */
      image-rendering: pixelated;
    }

    label {
      transition: 0.1s;
      cursor: pointer;
      text-align: center;
      font-size: 15px;
      -webkit-touch-callout: none;
      -webkit-user-select: none;
      -khtml-user-select: none;
      -moz-user-select: none;
      -ms-user-select: none;
      user-select: none;
      width: 130px;
      height: 40px;
      line-height: 40px;
      border-radius: 10px;
      color: white;
      background-color: #005500;
      box-shadow: 0px 4px #555555;
    }

    label:hover {
      background-color: #007700;
    }

    label:active {
      box-shadow: 0px 1px #555555;
      transform: translateY(3px);
    }

    script {
      display: none;
    }
  </style>
</head>
<body>
  <img id="unscaledImage">
  <img id="scaledImage">
  <input id="scale" type="range" min="1" max="100" value="50">
  <input id="file" type="file">
  <label for="file">Upload Image</label>
  <script type="application/javascript">
  void function() {
    "use strict";

    // DOM
    var unscaledImage = document.getElementById("unscaledImage");
    var scaledImage = document.getElementById("scaledImage");
    var scale = document.getElementById("scale");
    var file = document.getElementById("file");
    var imageUploaded = false;

    function onScaleChanged() {
      if (imageUploaded) {
        scaledImage.src = scaleOnGPU(this.value * 0.01);
      }
    }

    function onImageLoad() {
      URL.revokeObjectURL(this.src);
      uploadImageToGPU(this);
      scaledImage.src = scaleOnGPU(scale.value * 0.01);
      // Display the scaled result at the original size.
      scaledImage.style.width = this.width + "px";
      scaledImage.style.height = this.height + "px";
      imageUploaded = true;
    }

    function onImageError() {
      URL.revokeObjectURL(this.src);
    }

    function onImageSubmitted() {
      if (this.files[0]) {
        unscaledImage.src = URL.createObjectURL(this.files[0]);
      }
    }

    // GL
    var canvas = document.createElement("canvas");
    var gl = canvas.getContext("webgl",{ preserveDrawingBuffer: true });
    var program = null;
    var buffer = null;
    var texture = null;

    // Copy the image into the texture that is bound during setup.
    function uploadImageToGPU(img) {
      gl.texImage2D(gl.TEXTURE_2D,0,gl.RGBA,gl.RGBA,gl.UNSIGNED_BYTE,img);
    }

    // Resize the canvas, draw the textured quad into it and return a data URL.
    function scaleOnGPU(scale) {
      canvas.width = (unscaledImage.width * scale) | 0;
      canvas.height = (unscaledImage.height * scale) | 0;
      gl.viewport(0,0,canvas.width,canvas.height);
      gl.drawArrays(gl.TRIANGLES,0,6);

      return canvas.toDataURL();
    }

    // Entry point
    onload = function() {
      // DOM setup
      unscaledImage.onload = onImageLoad;
      unscaledImage.onerror = onImageError;
      // "change" also fires for keyboard and touch input, unlike "mouseup".
      scale.onchange = onScaleChanged;
      file.oninput = onImageSubmitted;

      // GL setup
      // Program (shaders)
      var vertexShader = gl.createShader(gl.VERTEX_SHADER);
      var fragmentShader = gl.createShader(gl.FRAGMENT_SHADER);

      program = gl.createProgram();

      gl.shaderSource(vertexShader,`
        precision mediump float;

        attribute vec2 aPosition;
        attribute vec2 aUV;

        varying vec2 vUV;

        void main() {
          vUV = aUV;
          gl_Position = vec4(aPosition,0.0,1.0);
        }
      `);

      gl.shaderSource(fragmentShader,`
        precision mediump float;

        varying vec2 vUV;

        uniform sampler2D uTexture;

        void main() {
          gl_FragColor = texture2D(uTexture,vUV);
        }
      `);

      gl.compileShader(vertexShader);
      gl.compileShader(fragmentShader);
      gl.attachShader(program,vertexShader);
      gl.attachShader(program,fragmentShader);
      // Pin the attribute locations that vertexAttribPointer uses below;
      // without this the linker is free to assign them in any order.
      gl.bindAttribLocation(program,0,"aPosition");
      gl.bindAttribLocation(program,1,"aUV");
      gl.linkProgram(program);
      gl.deleteShader(vertexShader);
      gl.deleteShader(fragmentShader);
      gl.useProgram(program);

      // Buffer: two triangles covering clip space.
      // Each vertex is x, y, u, v; V is flipped so the image appears upright.
      buffer = gl.createBuffer();

      gl.bindBuffer(gl.ARRAY_BUFFER,buffer);
      gl.bufferData(gl.ARRAY_BUFFER,new Float32Array([
         1.0, 1.0, 1.0, 0.0,
        -1.0, 1.0, 0.0, 0.0,
        -1.0,-1.0, 0.0, 1.0,

         1.0, 1.0, 1.0, 0.0,
        -1.0,-1.0, 0.0, 1.0,
         1.0,-1.0, 1.0, 1.0
      ]),gl.STATIC_DRAW);

      // WebGL has no gl.FALSE constant; pass a plain boolean.
      gl.vertexAttribPointer(0,2,gl.FLOAT,false,16,0);
      gl.vertexAttribPointer(1,2,gl.FLOAT,false,16,8);
      gl.enableVertexAttribArray(0);
      gl.enableVertexAttribArray(1);

      // Texture: nearest-neighbor filtering for both shrinking and enlarging.
      texture = gl.createTexture();

      gl.activeTexture(gl.TEXTURE0);
      gl.bindTexture(gl.TEXTURE_2D,texture);
      gl.texParameteri(gl.TEXTURE_2D,gl.TEXTURE_MAG_FILTER,gl.NEAREST);
      gl.texParameteri(gl.TEXTURE_2D,gl.TEXTURE_MIN_FILTER,gl.NEAREST);
      gl.texParameteri(gl.TEXTURE_2D,gl.TEXTURE_WRAP_S,gl.CLAMP_TO_EDGE);
      gl.texParameteri(gl.TEXTURE_2D,gl.TEXTURE_WRAP_T,gl.CLAMP_TO_EDGE);
    }

    onunload = function() {
      gl.deleteProgram(program);
      gl.deleteBuffer(buffer);
      gl.deleteTexture(texture);
    }
  }();
  </script>
</body>
</html>

Edit: To clarify what this could look like in an actual renderer, I've created another example that draws a scene to a low-resolution framebuffer and then scales it up to the canvas. (The key is to set the min and mag filters to nearest neighbor.)
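Stripped of the scene-specific details, the frame described above is two passes. The sketch below is an illustration only: `renderPixelated`, the `target` object and the two callbacks are hypothetical names, and it assumes `target.texture` is the color attachment of `target.framebuffer` with both of its filters set to gl.NEAREST.

```javascript
// Hypothetical outline of one frame (not from the original answer).
// target: { framebuffer, texture, width, height } for the low-res buffer.
// drawScene / drawUpscaleQuad: caller-supplied functions that issue the
// actual draw calls with their own programs and buffers.
function renderPixelated(gl, target, drawScene, drawUpscaleQuad) {
  // Pass 1: render the scene at low resolution into the framebuffer.
  gl.bindFramebuffer(gl.FRAMEBUFFER, target.framebuffer);
  gl.viewport(0, 0, target.width, target.height);
  drawScene();

  // Pass 2: draw the low-res texture onto a quad covering the canvas.
  // Nearest-neighbor filtering on the texture keeps the pixels blocky.
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  gl.bindTexture(gl.TEXTURE_2D, target.texture);
  gl.viewport(0, 0, gl.drawingBufferWidth, gl.drawingBufferHeight);
  drawUpscaleQuad();
}
```

The full example that follows does the same thing inline in its loop function, with a rotating cube as the scene.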

<!doctype html>
<html>
<head>
  <meta charset="utf-8">
  <style>
    body {
      background-color: black;
    }

    .center {
      display: block;
      margin-top: 30px;
      margin-left: auto;
      margin-right: auto;
      border: solid 1px white;
      border-radius: 10px;
    }

    script {
      display: none;
    }
  </style>
</head>
<body>
  <canvas id="canvas" class="center"></canvas>
  <input id="scale" type="range" min="1" max="100" value="100" class="center">
  <script type="application/javascript">
  void function() {
    "use strict";

    // DOM
    var canvasWidth = 180 << 1;
    var canvasHeight = 160 << 1;
    var canvas = document.getElementById("canvas");
    var scale = document.getElementById("scale");

    // Recreate the low-resolution render target at the new internal size.
    function onScaleChange() {
      var scale = this.value * 0.01;

      internalWidth = (canvasWidth * scale) | 0;
      internalHeight = (canvasHeight * scale) | 0;

      // The uniform belongs to cubeProgram, which must be current to set it.
      gl.useProgram(cubeProgram);
      gl.uniform1f(uAspectRatio,1.0 / (internalWidth / internalHeight));

      gl.deleteFramebuffer(framebuffer);
      gl.deleteTexture(framebufferTexture);

      [framebuffer,framebufferTexture] = createFramebuffer(internalWidth,internalHeight);
    }

    // GL
    var internalWidth = canvasWidth;
    var internalHeight = canvasHeight;
    var currentCubeAngle = -0.5;

    var gl = canvas.getContext("webgl",{ preserveDrawingBuffer: true, antialias: false }) || console.warn("WebGL Not Supported.");
    var cubeProgram = null; // Shaders to draw 3D cube
    var scaleProgram = null; // Shaders to scale the frame
    var uAspectRatio = null; // Aspect ratio for projection matrix
    var uCubeRotation = null; // uniform location for cube program
    var cubeBuffer = null; // cube model (attributes)
    var scaleBuffer = null; // quad position & UV's
    var framebuffer = null; // render target
    var framebufferTexture = null; // texture that is rendered to. (The cube is drawn on this)

    // attributeNames lists the vertex attributes in the order of the
    // locations (0, 1, 2, ...) that loop() passes to vertexAttribPointer.
    function createProgram(vertexCode,fragmentCode,attributeNames) {
      var vertexShader = gl.createShader(gl.VERTEX_SHADER);
      var fragmentShader = gl.createShader(gl.FRAGMENT_SHADER);

      gl.shaderSource(vertexShader,vertexCode);
      gl.shaderSource(fragmentShader,fragmentCode);
      gl.compileShader(vertexShader);
      gl.compileShader(fragmentShader);

      try {
        if (!gl.getShaderParameter(vertexShader,gl.COMPILE_STATUS)) { throw "VS: " + gl.getShaderInfoLog(vertexShader); }
        if (!gl.getShaderParameter(fragmentShader,gl.COMPILE_STATUS)) { throw "FS: " + gl.getShaderInfoLog(fragmentShader); }
      } catch(error) {
        gl.deleteShader(vertexShader);
        gl.deleteShader(fragmentShader);
        console.error(error);
      }

      var program = gl.createProgram();

      gl.attachShader(program,vertexShader);
      gl.attachShader(program,fragmentShader);
      // Pin the attribute locations before linking; otherwise the linker
      // is free to assign them in any order.
      attributeNames.forEach(function(name,index) {
        gl.bindAttribLocation(program,index,name);
      });
      gl.deleteShader(vertexShader);
      gl.deleteShader(fragmentShader);
      gl.linkProgram(program);

      return program;
    }

    function createBuffer(data) {
      var buffer = gl.createBuffer();

      gl.bindBuffer(gl.ARRAY_BUFFER,buffer);
      gl.bufferData(gl.ARRAY_BUFFER,Float32Array.from(data),gl.STATIC_DRAW);

      return buffer;
    }

    // Create a texture with nearest-neighbor filtering and a framebuffer
    // that renders into it.
    function createFramebuffer(width,height) {
      var texture = gl.createTexture();

      gl.bindTexture(gl.TEXTURE_2D,texture);
      gl.texParameteri(gl.TEXTURE_2D,gl.TEXTURE_MIN_FILTER,gl.NEAREST);
      gl.texParameteri(gl.TEXTURE_2D,gl.TEXTURE_MAG_FILTER,gl.NEAREST);
      gl.texParameteri(gl.TEXTURE_2D,gl.TEXTURE_WRAP_S,gl.CLAMP_TO_EDGE);
      gl.texParameteri(gl.TEXTURE_2D,gl.TEXTURE_WRAP_T,gl.CLAMP_TO_EDGE);
      gl.texImage2D(gl.TEXTURE_2D,0,gl.RGBA,width,height,0,gl.RGBA,gl.UNSIGNED_BYTE,null);

      var _framebuffer = gl.createFramebuffer();

      gl.bindFramebuffer(gl.FRAMEBUFFER,_framebuffer);
      gl.framebufferTexture2D(gl.FRAMEBUFFER,gl.COLOR_ATTACHMENT0,gl.TEXTURE_2D,texture,0);

      gl.bindTexture(gl.TEXTURE_2D,null);
      gl.bindFramebuffer(gl.FRAMEBUFFER,null);

      return [_framebuffer,texture];
    }

    function loop() {
      // Advance the cube rotation.
      currentCubeAngle += 0.01;

      if (currentCubeAngle > 2.0 * Math.PI) {
        currentCubeAngle = 0.0;
      }

      // Pass 1: draw the cube into the low-resolution framebuffer.
      gl.bindFramebuffer(gl.FRAMEBUFFER,framebuffer);
      gl.bindTexture(gl.TEXTURE_2D,null);
      gl.viewport(0,0,internalWidth,internalHeight);
      gl.useProgram(cubeProgram);
      gl.uniform1f(uCubeRotation,currentCubeAngle);
      gl.bindBuffer(gl.ARRAY_BUFFER,cubeBuffer);
      // WebGL has no gl.FALSE constant; pass a plain boolean.
      gl.vertexAttribPointer(0,3,gl.FLOAT,false,36,0);
      gl.vertexAttribPointer(1,3,gl.FLOAT,false,36,12);
      gl.vertexAttribPointer(2,3,gl.FLOAT,false,36,24);
      gl.enableVertexAttribArray(2);
      gl.clear(gl.COLOR_BUFFER_BIT);
      gl.drawArrays(gl.TRIANGLES,0,24);

      // Pass 2: draw the framebuffer texture onto a quad covering the canvas.
      gl.bindFramebuffer(gl.FRAMEBUFFER,null);
      gl.bindTexture(gl.TEXTURE_2D,framebufferTexture);
      gl.viewport(0,0,canvasWidth,canvasHeight);
      gl.useProgram(scaleProgram);
      gl.bindBuffer(gl.ARRAY_BUFFER,scaleBuffer);
      gl.vertexAttribPointer(0,2,gl.FLOAT,false,16,0);
      gl.vertexAttribPointer(1,2,gl.FLOAT,false,16,8);
      gl.disableVertexAttribArray(2);
      gl.clear(gl.COLOR_BUFFER_BIT);
      gl.drawArrays(gl.TRIANGLES,0,6);

      // Schedule the next frame.
      requestAnimationFrame(loop);
    }

    // Entry Point
    onload = function() {
      // DOM
      canvas.width = canvasWidth;
      canvas.height = canvasHeight;
      // "change" also fires for keyboard and touch input, unlike "mouseup".
      scale.onchange = onScaleChange;

      // GL
      gl.clearColor(0.5,0.5,0.5,1.0);
      gl.enable(gl.CULL_FACE);
      gl.enableVertexAttribArray(0);
      gl.enableVertexAttribArray(1);

      cubeProgram = createProgram(`
        precision mediump float;

        const float LIGHT_ANGLE = 0.5;
        const vec3 LIGHT_DIR = vec3(sin(LIGHT_ANGLE),0.0,cos(LIGHT_ANGLE));

        const mat4 OFFSET = mat4(
          1.0,0.0,0.0,0.0,
          0.0,1.0,0.0,0.0,
          0.0,0.0,1.0,0.0,
          0.0,0.0,-5.0,1.0
        );

        const float FOV = 0.698132;
        const float Z_NEAR = 1.0;
        const float Z_FAR = 20.0;
        const float COT_FOV = 1.0 / tan(FOV * 0.5);
        const float Z_FACTOR_1 = -(Z_FAR / (Z_FAR - Z_NEAR));
        const float Z_FACTOR_2 = -((Z_NEAR * Z_FAR) / (Z_FAR - Z_NEAR));

        attribute vec3 aPosition;
        attribute vec3 aNormal;
        attribute vec3 aColour;

        varying vec3 vColour;

        uniform float uAspectRatio;
        uniform float uRotation;

        void main() {
          float s = sin(uRotation);
          float c = cos(uRotation);

          mat4 PROJ = mat4(
            COT_FOV * uAspectRatio,0.0,0.0,0.0,
            0.0,COT_FOV,0.0,0.0,
            0.0,0.0,Z_FACTOR_1,Z_FACTOR_2,
            0.0,0.0,-1.0,0.0
          );

          mat4 rot = mat4(
            c  ,0.0,-s ,0.0,
            0.0,1.0,0.0,0.0,
            s  ,0.0,c  ,0.0,
            0.0,0.0,0.0,1.0
          );

          vec3 normal = (vec4(aNormal,0.0) * rot).xyz;

          vColour = aColour * max(0.4,dot(normal,LIGHT_DIR));
          gl_Position = PROJ * OFFSET * rot * vec4(aPosition,1.0);
        }
      `,`
        precision mediump float;

        varying vec3 vColour;

        void main() {
          gl_FragColor = vec4(vColour,1.0);
        }
      `,["aPosition","aNormal","aColour"]);

      uAspectRatio = gl.getUniformLocation(cubeProgram,"uAspectRatio");
      uCubeRotation = gl.getUniformLocation(cubeProgram,"uRotation");

      gl.useProgram(cubeProgram);
      gl.uniform1f(uAspectRatio,1.0 / (internalWidth / internalHeight));

      scaleProgram = createProgram(`
        precision mediump float;

        attribute vec2 aPosition;
        attribute vec2 aUV;

        varying vec2 vUV;

        void main() {
          vUV = aUV;
          gl_Position = vec4(aPosition,0.0,1.0);
        }
      `,`
        precision mediump float;

        varying vec2 vUV;

        uniform sampler2D uTexture;

        void main() {
          gl_FragColor = texture2D(uTexture,vUV);
        }
      `,["aPosition","aUV"]);

      cubeBuffer = createBuffer([
        // Position      Normal          Colour

        // Front
         1.0, 1.0, 1.0,  0.0, 0.0, 1.0,  0.0,0.0,0.6,
        -1.0, 1.0, 1.0,  0.0, 0.0, 1.0,  0.0,0.0,0.6,
        -1.0,-1.0, 1.0,  0.0, 0.0, 1.0,  0.0,0.0,0.6,

        -1.0,-1.0, 1.0,  0.0, 0.0, 1.0,  0.0,0.0,0.6,
         1.0,-1.0, 1.0,  0.0, 0.0, 1.0,  0.0,0.0,0.6,
         1.0, 1.0, 1.0,  0.0, 0.0, 1.0,  0.0,0.0,0.6,

        // Back
        -1.0,-1.0,-1.0,  0.0, 0.0,-1.0,  0.0,0.0,0.6,
        -1.0, 1.0,-1.0,  0.0, 0.0,-1.0,  0.0,0.0,0.6,
         1.0, 1.0,-1.0,  0.0, 0.0,-1.0,  0.0,0.0,0.6,

         1.0, 1.0,-1.0,  0.0, 0.0,-1.0,  0.0,0.0,0.6,
         1.0,-1.0,-1.0,  0.0, 0.0,-1.0,  0.0,0.0,0.6,
        -1.0,-1.0,-1.0,  0.0, 0.0,-1.0,  0.0,0.0,0.6,

        // Left
        -1.0, 1.0, 1.0,  1.0, 0.0, 0.0,  0.0,0.0,0.6,
        -1.0,-1.0,-1.0,  1.0, 0.0, 0.0,  0.0,0.0,0.6,
        -1.0,-1.0, 1.0,  1.0, 0.0, 0.0,  0.0,0.0,0.6,

        -1.0, 1.0, 1.0,  1.0, 0.0, 0.0,  0.0,0.0,0.6,
        -1.0, 1.0,-1.0,  1.0, 0.0, 0.0,  0.0,0.0,0.6,
        -1.0,-1.0,-1.0,  1.0, 0.0, 0.0,  0.0,0.0,0.6,

        // Right
         1.0,-1.0, 1.0, -1.0, 0.0, 0.0,  0.0,0.0,0.6,
         1.0,-1.0,-1.0, -1.0, 0.0, 0.0,  0.0,0.0,0.6,
         1.0, 1.0, 1.0, -1.0, 0.0, 0.0,  0.0,0.0,0.6,

         1.0,-1.0,-1.0, -1.0, 0.0, 0.0,  0.0,0.0,0.6,
         1.0, 1.0,-1.0, -1.0, 0.0, 0.0,  0.0,0.0,0.6,
         1.0, 1.0, 1.0, -1.0, 0.0, 0.0,  0.0,0.0,0.6
      ]);

      scaleBuffer = createBuffer([
        // Position  UV
         1.0, 1.0,  1.0,1.0,
        -1.0, 1.0,  0.0,1.0,
        -1.0,-1.0,  0.0,0.0,

         1.0, 1.0,  1.0,1.0,
        -1.0,-1.0,  0.0,0.0,
         1.0,-1.0,  1.0,0.0
      ]);

      [framebuffer,framebufferTexture] = createFramebuffer(internalWidth,internalHeight);

      loop();
    }

    // Exit point
    onunload = function() {
      gl.deleteProgram(cubeProgram);
      gl.deleteProgram(scaleProgram);
      gl.deleteBuffer(cubeBuffer);
      gl.deleteBuffer(scaleBuffer);
      gl.deleteFramebuffer(framebuffer);
      gl.deleteTexture(framebufferTexture);
    }
  }();
  </script>
</body>
</html>

这篇关于有没有一种更简单(并且仍然有效)的方法来通过最近邻重采样来放大画布渲染?的文章就介绍到这了,希望我们推荐的答案对大家有所帮助,也希望大家多多支持跟版网!

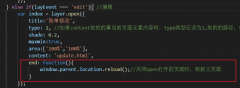

layer.open打开的页面关闭时,父页面刷新的方法layer.open打开的页面关闭时,父页面刷新的方法,在layer.open中添加: end: function(){ window.parent.location.reload();//关闭open打开的页面时,刷新父页面 }

layer.open打开的页面关闭时,父页面刷新的方法layer.open打开的页面关闭时,父页面刷新的方法,在layer.open中添加: end: function(){ window.parent.location.reload();//关闭open打开的页面时,刷新父页面 }